The flat screen is no longer enough. We explore the transition from 2D diffusion to 3D generation, explaining how "Gaussian Splatting" is rendering the traditional polygon obsolete and building the Metaverse in real-time.

For thirty years, the video game and VFX industries have relied on a single fundamental unit: the Polygon. Every character in Fortnite, every building in Grand Theft Auto, and every dinosaur in Jurassic Park is essentially an origami shell made of thousands of tiny triangles.

This approach, in which meshes are drawn to the screen through a process called Rasterization, has served us well. But it has hit a ceiling. Polygons are rigid. They are computationally expensive to light realistically. They cannot handle hair, smoke, or transparent fluids without massive "hacks" and cheats. Most importantly, they are fundamentally hollow.

As we move into the era of Generative AI, a new technology has emerged that renders the polygon obsolete. It works less like geometry and more like a painting in mid-air. It is called 3D Gaussian Splatting, and it represents the most significant shift in computer graphics since the invention of the GPU.

Part I: The Tyranny of the Triangle (1995-2023)

To understand why the revolution is happening now, we must first understand the regime we are overthrowing. Since the days of the first PlayStation, 3D graphics have been a lie.

When you see a brick wall in a video game, you are not seeing bricks. You are seeing a flat 2D picture of bricks (a texture) plastered onto a flat geometric plane (a mesh). The computer calculates the angle of the light, checks the angle of the plane, and darkens the pixels to simulate shading.
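To make that "check the angle, darken the pixel" step concrete, here is a minimal sketch of diffuse (Lambertian) shading for a single texture sample. The function name `lambert_shade` and the brick color are illustrative assumptions, and real engines layer far more on top (specular terms, shadow maps, baked lighting).

```python
import numpy as np

def lambert_shade(texel_rgb, normal, light_dir):
    """Darken one texture sample by the angle between the surface and the light.

    light_dir points from the surface toward the light.
    """
    n = np.asarray(normal, dtype=float)
    l = np.asarray(light_dir, dtype=float)
    n = n / np.linalg.norm(n)
    l = l / np.linalg.norm(l)
    # Cosine of the angle between plane normal and light direction.
    # Clamp at zero so surfaces facing away go black instead of negative.
    intensity = max(0.0, float(np.dot(n, l)))
    return np.asarray(texel_rgb, dtype=float) * intensity

brick = [0.7, 0.3, 0.2]          # one texel from a hypothetical brick texture
wall_normal = [0.0, 0.0, 1.0]    # the wall faces +z
head_on = lambert_shade(brick, wall_normal, [0.0, 0.0, 1.0])
grazing = lambert_shade(brick, wall_normal, [1.0, 0.0, 0.1])
behind = lambert_shade(brick, wall_normal, [0.0, 0.0, -1.0])
```

The wall itself never changes. Only the brightness of the flat picture does, which is exactly the illusion described above.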

This process involves a massive pipeline of manual labor:

Standard Mesh Pipeline

Protocol: Legacy Rasterization // Explicit Geometry

- Stage 01, Modeling: Manual vertex manipulation & mesh topology optimization.

- Stage 02, UV Mapping: Destructive 3D-to-2D projection (peeling logic).

- Stage 03, Texturing: Bitmap projection for Albedo, Normals, & Roughness.

- Stage 04, Rigging: Skeleton hierarchy & weight painting (Deformation).

- Stage 05, Lighting: Explicit ray-box intersection & shadow baking.

Efficiency: 0.12% | Status: Deprecated | Total Labor: 40-100 Hours | System: Raster

"The polygon is an assembly language for graphics. It is too low-level. We are building cathedrals out of toothpicks."

— Graphics Engineer, SIGGRAPH 2024

The problem isn't just the labor; it's the Explicit Geometry. A polygon mesh is binary: a point is either there or not there. This makes it terrible for things that are fuzzy, semi-transparent, or complex—like hair, clouds, fire, or leaves. Game developers have spent decades inventing workarounds (transparency sorting, alpha cards, volumetric raymarching) just to hide the fact that their world is made of hard plastic triangles.

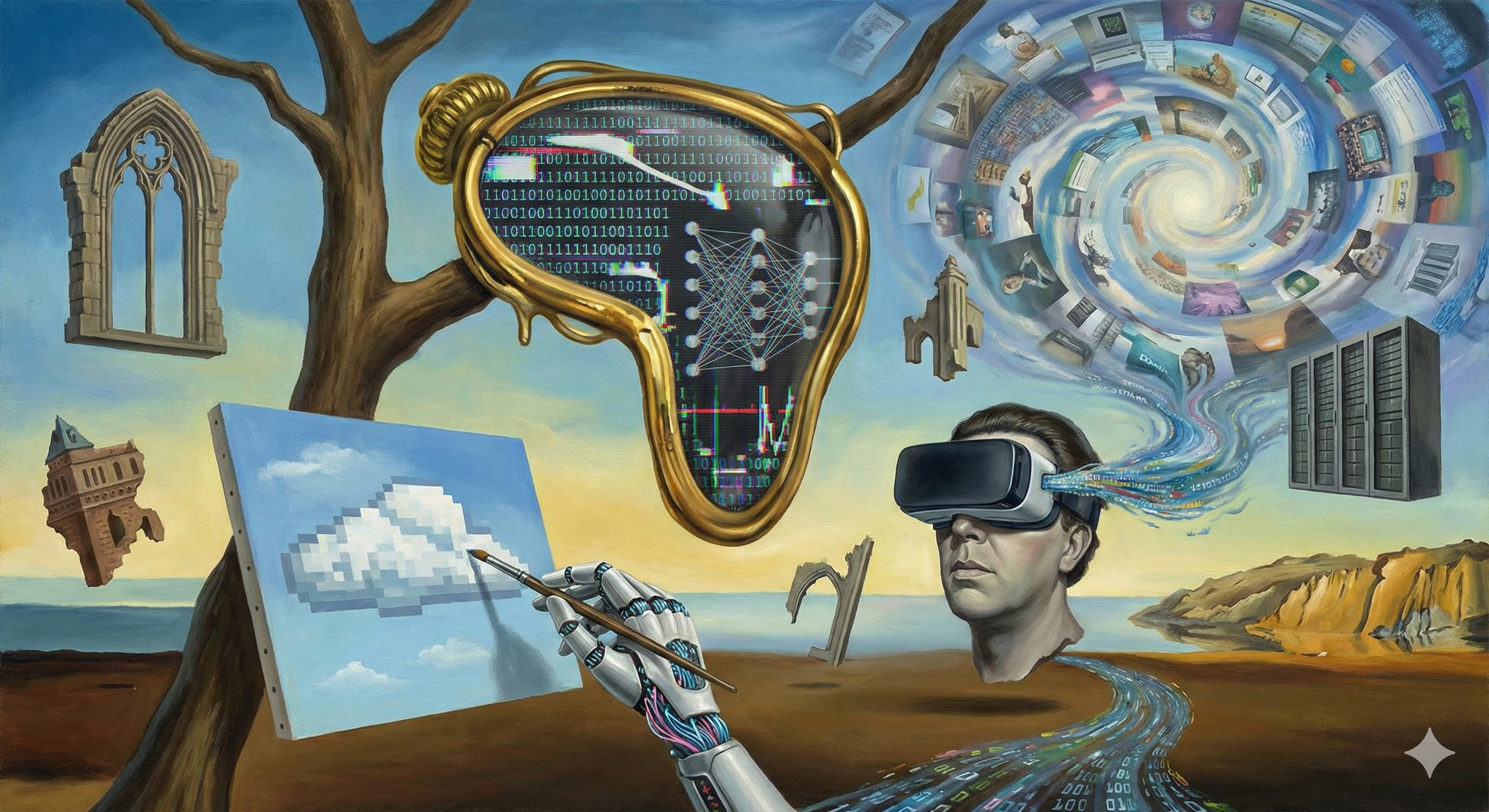

Part II: The Neural Revolution (NeRFs)

In 2020, researchers at UC Berkeley and Google shocked the world with a paper titled "NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis."

They asked a radical question: What if we don't store geometry at all?

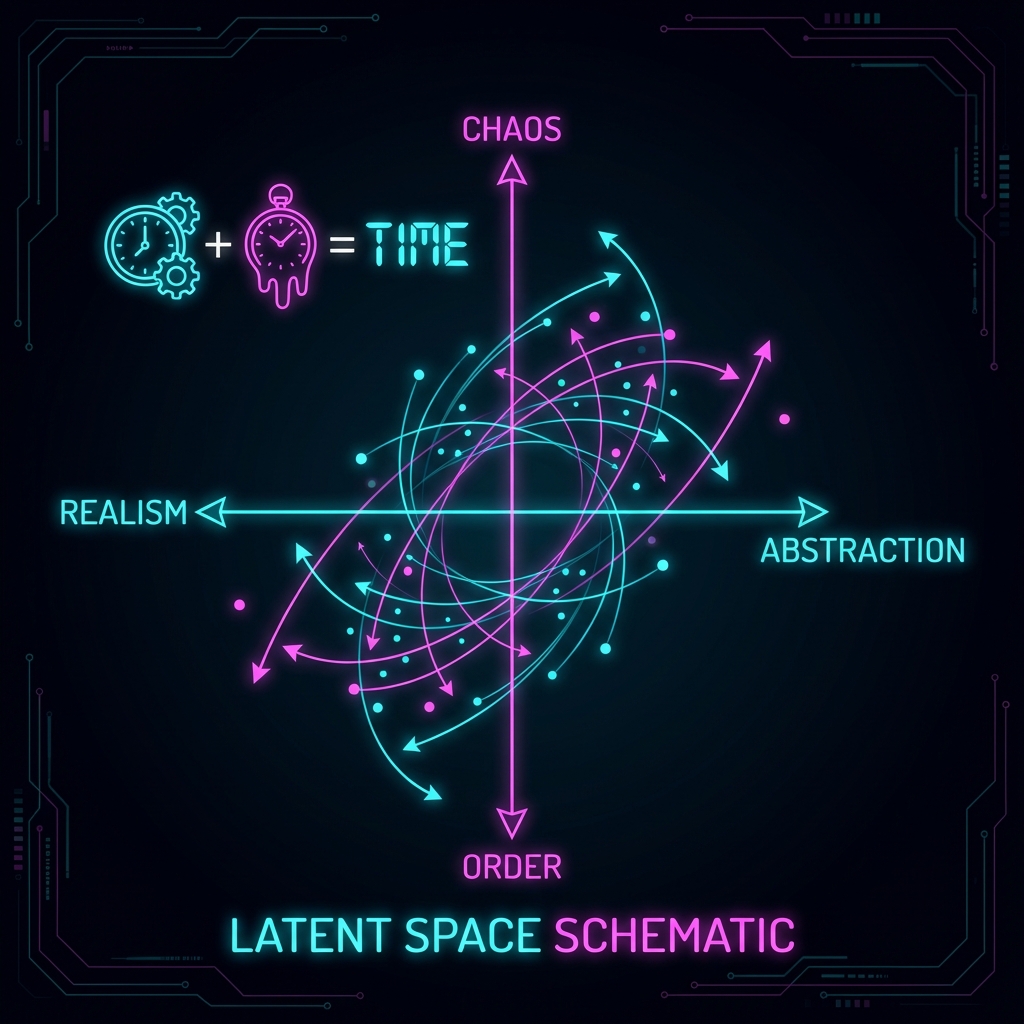

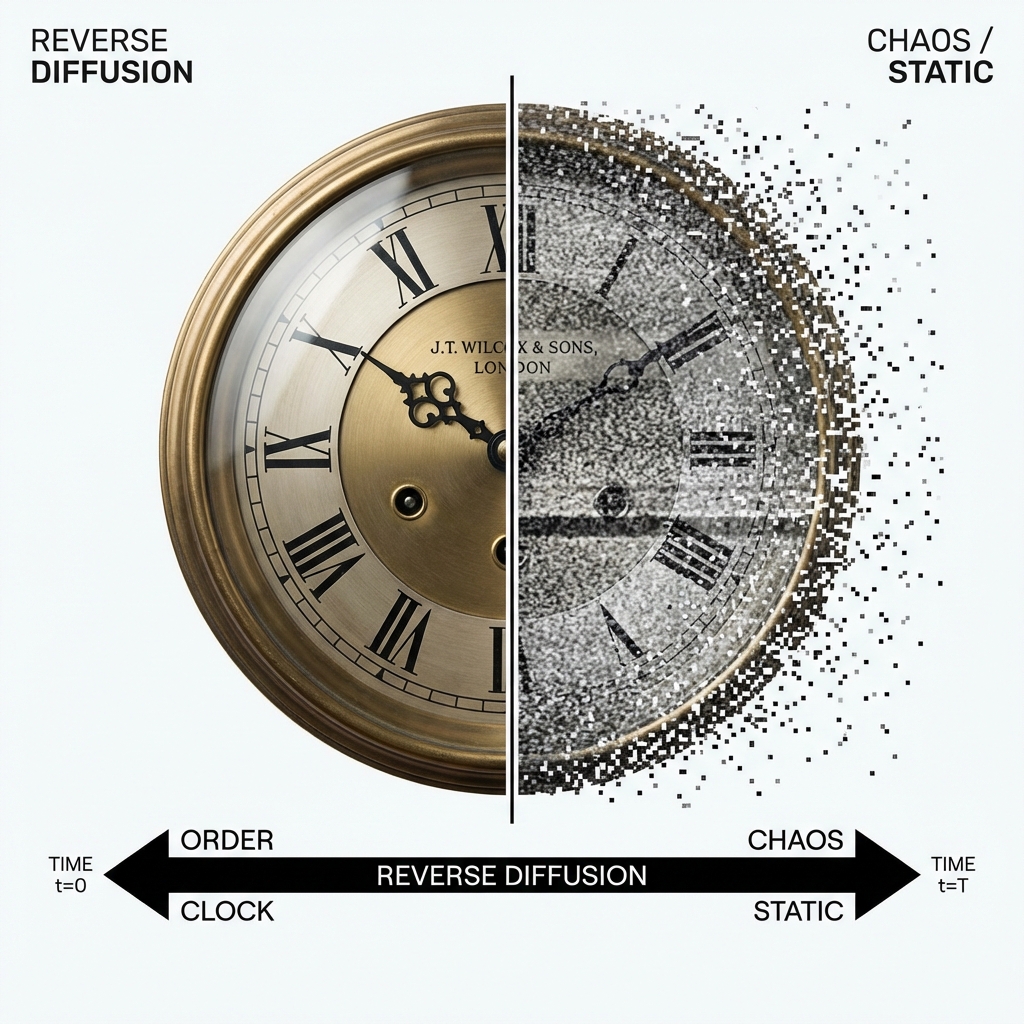

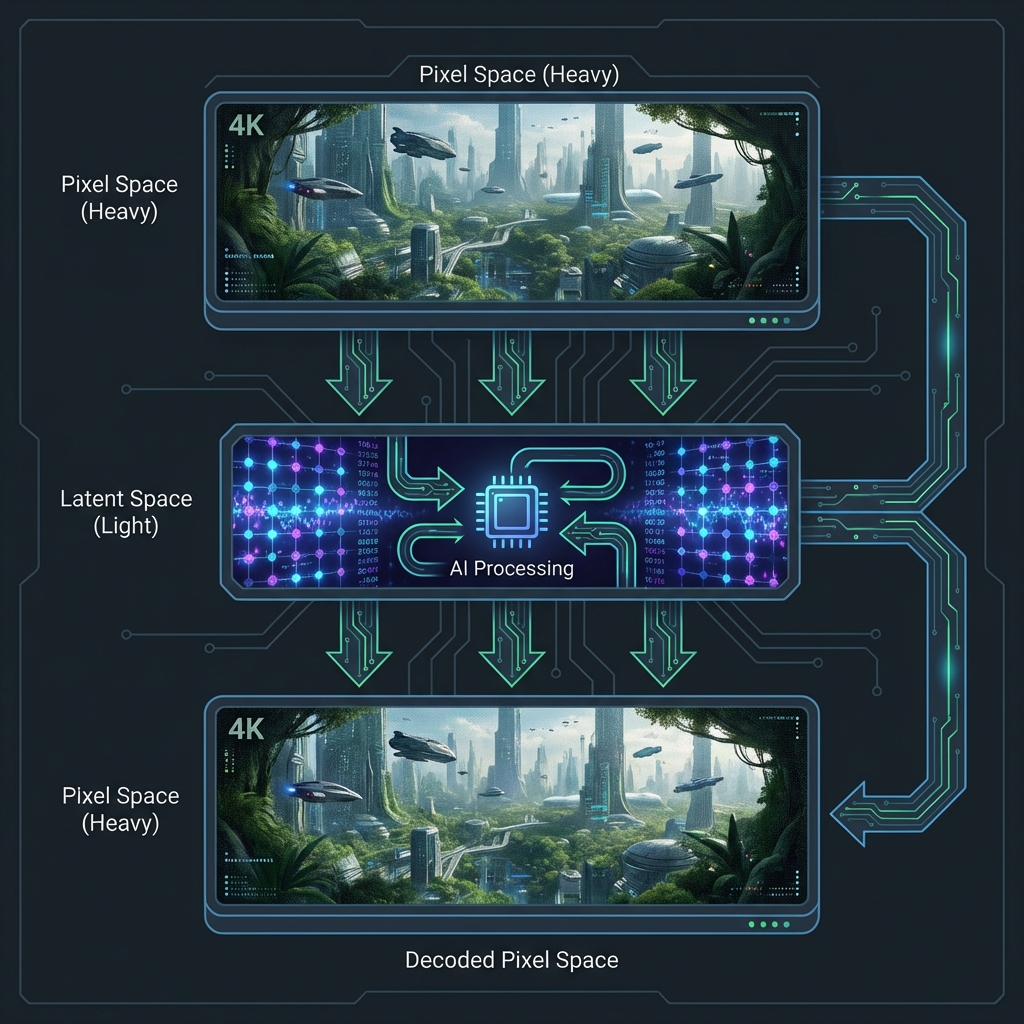

Instead of a list of triangles, a NeRF is a Function. Specifically, it is a Neural Network (a Multilayer Perceptron). You give the network a coordinate `(x, y, z)` and a viewing direction `(theta, phi)`, and it asks: "If I were standing here looking this way, what color would I see, and how dense is the fog?"

The network answers: "You see Red, and it is very dense."

To render an image, the computer shoots a ray for every pixel into the scene. It samples the neural network at hundreds of points along the ray. If the density is high, it accumulates color. If the density is 0 (empty air), it keeps going.
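The accumulation along one ray can be sketched in a few lines. This is a minimal illustration of the standard volume-rendering sum, assuming the colors and densities have already been produced for each sample. In a real NeRF they come from the neural network; here they are hard-coded, and the name `render_ray` is made up for this example.

```python
import numpy as np

def render_ray(colors, densities, deltas):
    """Composite samples along one ray, front to back.

    colors:    (N, 3) RGB at each sample
    densities: (N,)   how dense the "fog" is at each sample
    deltas:    (N,)   distance between consecutive samples
    """
    colors = np.asarray(colors, dtype=float)
    densities = np.asarray(densities, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # Opacity contributed by each small segment of the ray.
    alpha = 1.0 - np.exp(-densities * deltas)
    # Transmittance: fraction of light that survives to reach each sample.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alpha[:-1]]))
    weights = trans * alpha
    pixel = (weights[:, None] * colors).sum(axis=0)
    return pixel, weights

# Two samples of empty air, then a dense red surface, then blue behind it.
colors = [[0, 0, 0], [0, 0, 0], [1, 0, 0], [0, 0, 1]]
densities = [0.0, 0.0, 50.0, 50.0]
deltas = [0.1, 0.1, 0.1, 0.1]
pixel, weights = render_ray(colors, densities, deltas)
```

Empty samples get zero weight, the red surface absorbs almost all of the ray, and the blue sample behind it barely registers. Multiply this by hundreds of samples per ray and millions of rays per frame, and the cost becomes obvious.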

The result was strikingly photorealistic. Because it calculated light volumetrically (like fog) and let color vary with the viewing direction, it could handle reflections, glass, and hair far better than a mesh. It didn't have "edges." It was a continuous field of density.

The problem? Speed. Querying a neural network millions of times per frame is incredibly slow. Generating a single image took seconds. It was useless for video games.

Camera Ray (x, y, z, θ, φ) ➜ Neural Network (MLP), "The Black Box" ➜ Sample Color and Density (R, G, B, σ)

The "slow" process: This calculation happens for every sample along every pixel's ray, every frame.

Part III: The King is Dead, Long Live Gaussian Splatting

In August 2023, while the world was distracted by ChatGPT, a paper from Inria (France) quietly changed the course of history: "3D Gaussian Splatting for Real-Time Radiance Field Rendering."

The researchers realized that NeRFs were right about Volumetric Rendering, but wrong about using a Neural Network to do it.

Instead of a "black box" neural network, they went back to explicit data. They represented the world as a cloud of millions of 3D Gaussians.

The Anatomy of a Splat

A "Splat" is not a point. It is an ellipsoid with 4 properties:

- Position: Center point (x, y, z)

- Covariance: Rotation & Stretch

- Color: Spherical Harmonics

- Opacity: Alpha (how see-through it is)

Imagine a fuzzy, colored oval. Now imagine 5 million of them overlapping. Because they are semi-transparent, they blend together perfectly to form smooth surfaces.
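The "rotation and stretch" of a single splat can be written down directly. The sketch below builds a covariance matrix from a scale vector and a rotation quaternion, using the factorization Σ = R S Sᵀ Rᵀ, and then evaluates how quickly the splat fades away from its center. The function names are assumptions for illustration, and color (spherical harmonics) is left out entirely.

```python
import numpy as np

def quat_to_rotmat(q):
    """Convert a (w, x, y, z) quaternion to a 3x3 rotation matrix."""
    w, x, y, z = np.asarray(q, dtype=float) / np.linalg.norm(q)
    return np.array([
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z),     2 * (x * z + w * y)],
        [2 * (x * y + w * z),     1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y),     2 * (y * z + w * x),     1 - 2 * (x * x + y * y)],
    ])

def splat_covariance(scale, quat):
    """Covariance = R S S^T R^T: stretch along the axes, then rotate."""
    M = quat_to_rotmat(quat) @ np.diag(np.asarray(scale, dtype=float))
    return M @ M.T

def splat_falloff(point, mean, cov):
    """Unnormalized Gaussian weight: 1.0 at the center, fading outward."""
    d = np.asarray(point, dtype=float) - np.asarray(mean, dtype=float)
    return float(np.exp(-0.5 * d @ np.linalg.solve(cov, d)))

# A cigar-shaped splat, long along x, then rotated 90 degrees about z
# so that its long axis ends up pointing along y.
half = np.sqrt(0.5)
cov = splat_covariance(scale=[2.0, 0.5, 0.5], quat=[half, 0.0, 0.0, half])
center = [0.0, 0.0, 0.0]
along_long_axis = splat_falloff([0.0, 2.0, 0.0], center, cov)
along_short_axis = splat_falloff([2.0, 0.0, 0.0], center, cov)
```

Storing scale and rotation separately, rather than a raw 3x3 matrix, keeps the covariance valid (symmetric, non-negative) no matter how an optimizer nudges the numbers.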

This technique combines the best of both worlds:

- Differentiable (Like NeRF): We can train it using gradient descent. We start with a rough set of points (often a sparse point cloud recovered from the input photos), render them, compare the picture to a real photo, and let the optimizer nudge each splat's position, shape, color, and opacity until the render matches.

- Rasterizable (Like Polygons): Since they are just data points (not a neural net), we can sort them by depth and splat them onto the screen in real time with a GPU rasterizer (the original paper uses a custom tile-based one).
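The "differentiable" half is easier to believe once you watch it work. Below is a deliberately tiny toy, not the paper's training loop: one Gaussian on a 1D "image", with gradients derived by hand, fitted to a target "photo" by plain gradient descent. The names `render` and `fit_splat`, the learning rate, and the step count are all assumptions chosen for illustration; real systems optimize millions of 3D splats with automatic differentiation.

```python
import numpy as np

def render(x, mu, amp, sigma=0.15):
    """Draw one 1D 'splat': a bump of height amp centered at mu."""
    return amp * np.exp(-0.5 * ((x - mu) / sigma) ** 2)

def fit_splat(target, x, mu=0.3, amp=0.2, sigma=0.15, lr=0.05, steps=5000):
    """Move and brighten the splat until its render matches the target."""
    for _ in range(steps):
        g = np.exp(-0.5 * ((x - mu) / sigma) ** 2)
        residual = amp * g - target          # render minus photo
        # Gradients of the mean squared error with respect to each parameter.
        grad_amp = np.mean(2.0 * residual * g)
        grad_mu = np.mean(2.0 * residual * amp * g * (x - mu) / sigma**2)
        amp -= lr * grad_amp
        mu -= lr * grad_mu
    return mu, amp

x = np.linspace(0.0, 1.0, 200)
photo = render(x, mu=0.6, amp=0.8)   # the "ground truth" we want to match
mu, amp = fit_splat(photo, x)        # starts in the wrong place, too dim
```

Nothing here is a neural network. The parameters being learned are the splat itself, which is why the trained result can be handed straight to a rasterizer.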

The Trilemma of 3D

Why Gaussian Splatting is the 'Holy Grail'

NeRFs: High Quality, Slow (Pre-2025)

Polygons: Fast, Hard to Make

Gaussians: Fast, Easy, Photo-real

Part IV: Generative 3D (The Holodeck)

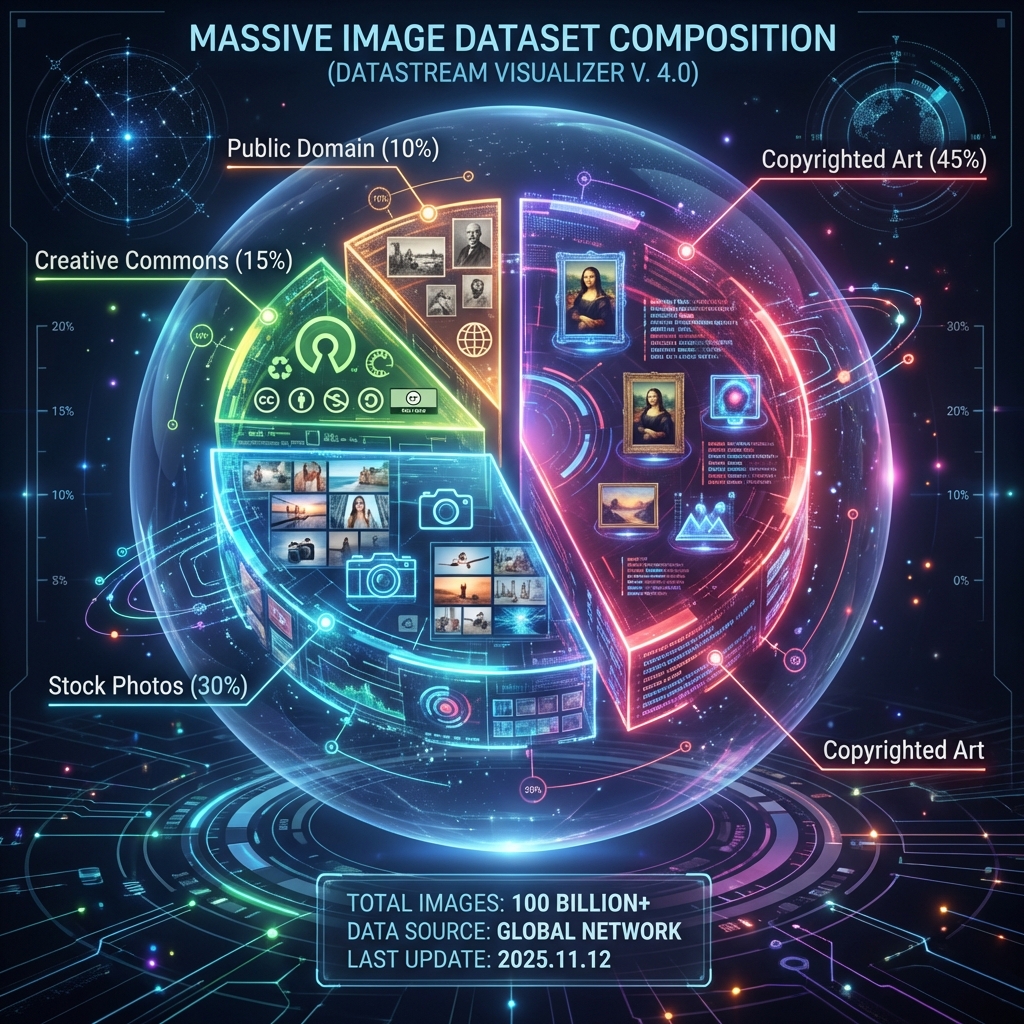

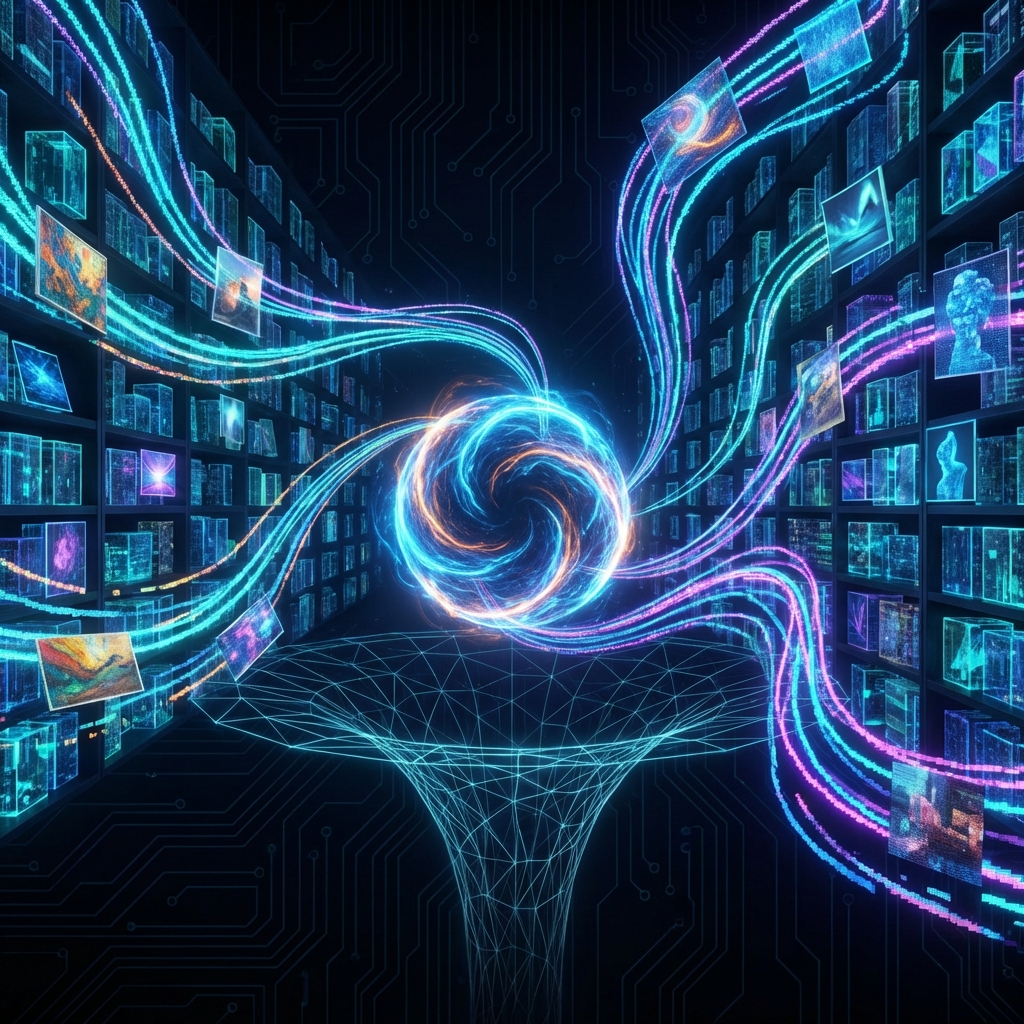

Once we have a format that is differentiable (trainable by AI), we can connect it to the massive "brains" of Large Language Models and Diffusion Models. We are moving from "Photogrammetry" (scanning real objects) to "Generative 3D" (dreaming objects).

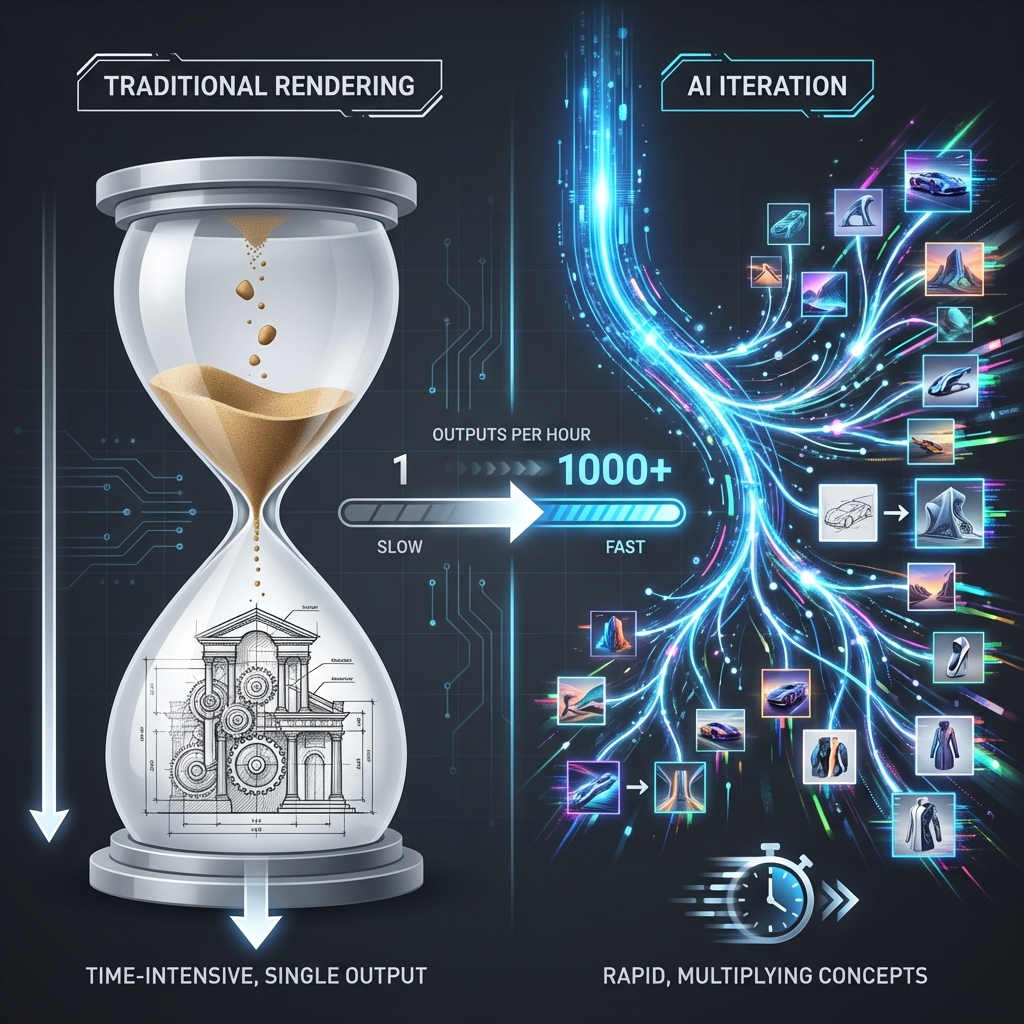

In 2024 and 2025, tools like Luma Genie, TripoSR, and Rodin emerged. They fundamentally change the asset creation pipeline.

The New Pipeline:

// 1. Prompt

User: "A rusted medieval helmet, moss growing on the visor"

// 2. Multi-View Diffusion

AI: Generates Front, Back, Left, and Right views.

// 3. LRM Reconstruction

Transformer: Guesses 3D shape from images (0.5s).

// 4. Gaussian Refinement

Optimizer: Sharpens textures and lighting (10s).

>> Result: Asset Ready for Game Engine

Luma Genie pioneered this with high fidelity, using a hybrid approach that allows for video-to-3D. TripoSR (released jointly by Stability AI and Tripo AI) shocked the industry by doing this in under 1 second, proving that 3D generation could be instant. Rodin focused on high-poly detail, proving that AI could handle professional-grade sculpting nuances.

Part V: The Death of the Artist?

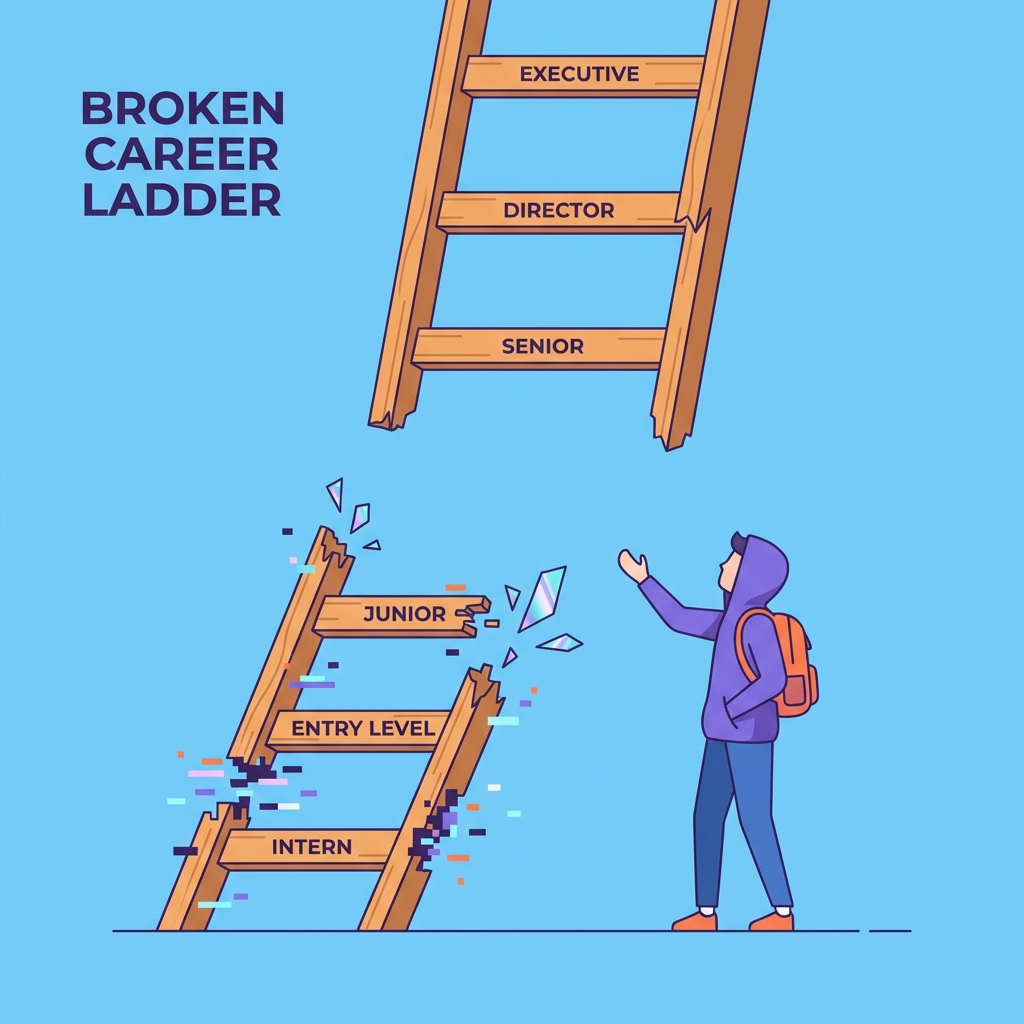

If an Art Director can type "Dystopian City Block" and get a full 3D environment in 30 seconds, what happens to the 3D modeler?

The role shifts. We are seeing the death of "the technician" and the rise of "the curator." Manual UV unwrapping, topology optimization, and retopology—these are technical chores that no artist enjoys. AI removes the chores.

🛠️

The Old Way (Technician)

- [-] Manual Vertex Pushing

- [-] UV Unwrapping Hell

- [-] Retopology

🎨

The New Way (Director)

- [+] Prompt Engineering

- [+] Composition & Lighting

- [+] Narrative Direction

However, the "Intent" remains human. A generated chair is generic: it is effectively the average of all the chairs in the dataset. A specific chair, one with a scratch on the left leg because the character's father threw a bottle at it in 1995, requires specific direction. The artist becomes the director, composing these AI-generated assets into a coherent narrative.

Furthermore, we are moving toward Scene editing. New research allows us to "select" a cluster of Gaussians and move them. We can "paint" physics properties onto them. We are building tools that let us sculpt with light clouds rather than clay.
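Because a splat scene is explicit data, "select and move" can be as plain as array indexing. The sketch below assumes the splat centers live in an `(N, 3)` array and uses a simple bounding box as the selection tool. Both helper names are hypothetical, and research editors use far smarter selection (semantic masks, for instance).

```python
import numpy as np

def select_in_box(means, box_min, box_max):
    """Boolean mask of splats whose centers fall inside an axis-aligned box."""
    means = np.asarray(means, dtype=float)
    inside = (means >= np.asarray(box_min)) & (means <= np.asarray(box_max))
    return np.all(inside, axis=1)

def translate_selection(means, mask, offset):
    """Return a copy of the centers with the selected splats shifted."""
    moved = np.array(means, dtype=float)   # copy, leave the original intact
    moved[mask] += np.asarray(offset, dtype=float)
    return moved

# Four splat centers: two belong to a "helmet", two to the floor.
means = np.array([
    [0.0, 1.0, 0.0],
    [0.1, 1.1, 0.0],
    [0.0, 0.0, 0.0],
    [2.0, 0.0, 1.0],
])
helmet = select_in_box(means, box_min=[-0.5, 0.5, -0.5], box_max=[0.5, 1.5, 0.5])
edited = translate_selection(means, helmet, offset=[3.0, 0.0, 0.0])
```

A pure translation leaves each splat's shape, color, and opacity untouched. Rotating a selection would also require rotating every selected covariance, which is omitted here.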

Part VI: The Remaining Barrier (Interaction)

The final boss is Physics. A Gaussian Splat is a ghost. It looks like a rock, but it has no "surface." In a video game, if you shoot it, the bullet flies through because there is no polygon wall to stop it.

The current solution is a hybrid: we generate the beautiful Gaussian cloud for the Visuals, and we generate a low-resolution invisible "Collision Mesh" for the Physics. But research into "Physically Aware Diffusion" suggests that soon, the AI will simulate the physics based on the visuals alone.

It will "know" that the moss is soft and the steel is hard, calculating collision based on density fields rather than geometric planes.
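What "collision based on density fields" might look like can be sketched without any mesh at all. The toy below is an assumption-heavy illustration, not the method of any particular paper: it treats each splat as a round (isotropic) blob, sums their opacity-weighted falloff at a point, and marches a bullet forward until that sum crosses a threshold. The threshold, step size, and function names are all invented for this example.

```python
import numpy as np

def density_at(point, means, sigmas, opacities):
    """Sum of opacity-weighted Gaussian falloffs at one point in space."""
    means = np.asarray(means, dtype=float)
    d2 = np.sum((means - np.asarray(point, dtype=float)) ** 2, axis=1)
    sigmas = np.asarray(sigmas, dtype=float)
    return float(np.sum(np.asarray(opacities) * np.exp(-0.5 * d2 / sigmas**2)))

def first_hit(origin, direction, means, sigmas, opacities,
              threshold=0.5, step=0.05, max_dist=10.0):
    """March along a ray; return the distance where density gets 'solid'."""
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    direction = direction / np.linalg.norm(direction)
    for t in np.arange(0.0, max_dist, step):
        if density_at(origin + t * direction, means, sigmas, opacities) >= threshold:
            return float(t)
    return None   # the bullet flew through empty space

# A "rock" made of a single fat splat, five units in front of the player.
rock_means = [[0.0, 0.0, 5.0]]
rock_sigmas = [0.5]
rock_opacities = [0.9]
hit = first_hit([0, 0, 0], [0, 0, 1], rock_means, rock_sigmas, rock_opacities)
miss = first_hit([0, 0, 0], [1, 0, 0], rock_means, rock_sigmas, rock_opacities)
```

The bullet stops a little before the splat's center, where the blob becomes dense enough to count as solid. Where exactly to put that threshold is the hard, unsolved part.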

The "Ghost" Problem (Interaction)

☁️

Visual Layer

Gaussian Cloud

(No Surface)

🧊

Physics Layer

Collision Mesh

(Invisible)

"In today's engines, you walk on the invisible red block, but you see the blue cloud. The goal of Physically Aware Diffusion is to unify them."

Conclusion: The Infinite Canvas

We are standing at the edge of the Spatial Web. For 30 years, we looked at screens. Soon, we will look through them. The capability to generate photorealistic 3D worlds on demand, in real-time, essentially solves the "Content Problem" of the Metaverse.

It is no longer about building a level. It is about prompting a dream. The barrier to entry for world-building has dropped from "Degree in Computer Science" to "Ability to Describe a Dream." And that is a terrifying, beautiful thing.